#!/usr/bin/env python

# coding: utf-8

# In[30]:

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from torch.utils.data import DataLoader

import torchvision

import torchvision.transforms as transforms

# In[31]:

import visdom

vis = visdom.Visdom()

vis.close(env="main")

# In[32]:

def value_tracker(value_plot, value, num):
    '''Append the point (num, value) to a visdom line plot; value and num are Tensors.'''
    vis.line(X=num,
             Y=value,
             win=value_plot,
             update='append'
             )

# In[33]:

#device = 'cuda' if torch.cuda.is_available() else 'cpu'

device = 'cpu'

print(device)

torch.manual_seed(2020)

if device == 'cuda':
    torch.cuda.manual_seed_all(2020)

# In[34]:

train_data_mean = [0.5, 0.5, 0.5]

train_data_std = [0.5, 0.5, 0.5]

# In[35]:

transform_train = transforms.Compose([
    transforms.RandomCrop(16, padding=4),
    transforms.ToTensor(),
    #transforms.Normalize(train_data_mean, train_data_std)
])

transform_test = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize(train_data_mean, train_data_std)
])

trainset = torchvision.datasets.ImageFolder(root='C:/Users/beomseokpark/Desktop/CNN/train_data_processed',
                                            transform=transform_train)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=128,
                                          shuffle=True, num_workers=0)

testset = torchvision.datasets.ImageFolder(root='C:/Users/beomseokpark/Desktop/CNN/test_data_processed',
                                           transform=transform_test)

testloader = torch.utils.data.DataLoader(testset, batch_size=128,
                                         shuffle=False, num_workers=0)

# In[36]:

import torchvision.models.resnet as resnet

# In[37]:

conv1x1=resnet.conv1x1

Bottleneck = resnet.Bottleneck

BasicBlock= resnet.BasicBlock

# In[38]:

class ResNet(nn.Module):

    def __init__(self, block, layers, num_classes=1000, zero_init_residual=False):
        super(ResNet, self).__init__()
        self.inplanes = 16
        self.conv1 = nn.Conv2d(3, 16, kernel_size=3, stride=1, padding=1,
                               bias=False)
        self.bn1 = nn.BatchNorm2d(16)
        self.relu = nn.ReLU(inplace=True)
        #self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

        self.layer1 = self._make_layer(block, 16, layers[0], stride=1)
        self.layer2 = self._make_layer(block, 32, layers[1], stride=1)
        self.layer3 = self._make_layer(block, 64, layers[2], stride=2)
        self.layer4 = self._make_layer(block, 128, layers[3], stride=2)

        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(128 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

        if zero_init_residual:
            for m in self.modules():
                if isinstance(m, Bottleneck):
                    nn.init.constant_(m.bn3.weight, 0)
                elif isinstance(m, BasicBlock):
                    nn.init.constant_(m.bn2.weight, 0)

    def _make_layer(self, block, planes, blocks, stride=1):
        downsample = None
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                conv1x1(self.inplanes, planes * block.expansion, stride),
                nn.BatchNorm2d(planes * block.expansion),
            )

        layers = []
        layers.append(block(self.inplanes, planes, stride, downsample))
        self.inplanes = planes * block.expansion
        for _ in range(1, blocks):
            layers.append(block(self.inplanes, planes))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        #x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        x = self.avgpool(x)
        x = x.view(x.size(0), -1)
        x = self.fc(x)

        return x

# In[39]:

resnet18 = ResNet(resnet.BasicBlock, [2, 2, 2, 2], 4, True).to(device)

resnet50 = ResNet(resnet.Bottleneck, [3, 4, 6, 3], 4, True).to(device)

# In[40]:

criterion = nn.CrossEntropyLoss().to(device)

#optimizer = torch.optim.SGD(resnet50.parameters(), lr = 0.005, momentum = 0.9, weight_decay=5e-4)#default = lr 0.1

optimizer = torch.optim.Adam(resnet50.parameters(), lr=0.05)

lr_sche = optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=1)#default = gamma 0.5

# In[41]:

loss_plt = vis.line(Y=torch.Tensor(1).zero_(),opts=dict(title='loss_tracker', legend=['loss'], showlegend=True))

acc_plt = vis.line(Y=torch.Tensor(1).zero_(),opts=dict(title='Accuracy', legend=['Acc'], showlegend=True))

# In[42]:

def acc_check(net, test_set, epoch, save=1):
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_set:
            images, labels = data
            images = images.to(device)
            labels = labels.to(device)
            outputs = net(images)

            _, predicted = torch.max(outputs.data, 1)

            total += labels.size(0)
            correct += (predicted == labels).sum().item()

    acc = (100 * correct / total)
    print('Accuracy of the network on test images: %d %%' % acc)
    if save:
        torch.save(net.state_dict(), "C:/Users/beomseokpark/Desktop/CNN/model/ResNet_epoch_{}_acc_{}.pth".format(epoch, int(acc)))
    return acc

# In[43]:

print(len(trainloader))

epochs = 50

for epoch in range(epochs):
    running_loss = 0.0

    for i, data in enumerate(trainloader, 0):
        inputs, labels = data
        inputs = inputs.to(device)
        labels = labels.to(device)

        optimizer.zero_grad()

        outputs = resnet50(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        if i % 30 == 29:
            value_tracker(loss_plt, torch.Tensor([running_loss/30]), torch.Tensor([i + epoch*len(trainloader) ]))
            print('[%d, %5d] loss: %.3f' %
                  (epoch + 1, i + 1, running_loss / 30))
            running_loss = 0.0

    # Step the scheduler after this epoch's optimizer updates (the order PyTorch >= 1.1 expects);
    # with gamma=1 the learning rate is unchanged either way.
    lr_sche.step()

    acc = acc_check(resnet50, testloader, epoch, save=1)
    value_tracker(acc_plt, torch.Tensor([acc]), torch.Tensor([epoch]))

print('Finished Training')

# In[ ]:

correct = 0
total = 0

with torch.no_grad():
    for data in testloader:
        images, labels = data
        images = images.to(device)
        labels = labels.to(device)
        outputs = resnet50(images)

        _, predicted = torch.max(outputs.data, 1)

        total += labels.size(0)
        correct += (predicted == labels).sum().item()

print('Accuracy of the network on test images: %d %%' % (
    100 * correct / total))

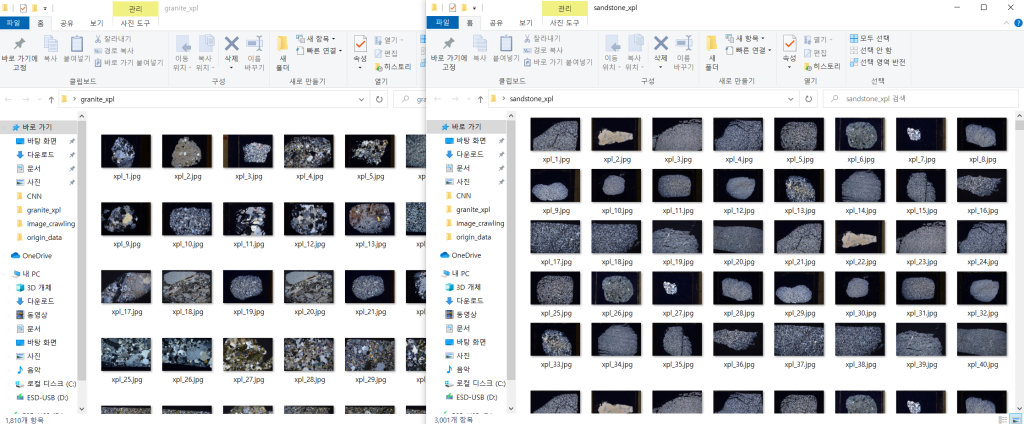

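I collected a dataset of rock thin-section images like the one shown above and wrote the ResNet code above to classify them into 4 classes, but the accuracy is very poor.

Training does not seem to be working well. Is this dataset inherently hard to learn?

For a dataset of this difficulty, what level of accuracy should I normally expect?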
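
As a reference point for the accuracy question, here is a minimal sketch of the two trivial baselines that any trained classifier should beat: uniform random guessing and always predicting the most frequent class. The function name `baseline_accuracies` and the example label list are hypothetical, made up for illustration; the real class distribution of the rock thin-section dataset is not given in this post.

```python
from collections import Counter


def baseline_accuracies(labels):
    """Return (chance, majority) accuracy in percent for a list of class labels.

    chance   : expected accuracy of uniform random guessing over the classes seen
    majority : accuracy of always predicting the most frequent class
    """
    counts = Counter(labels)
    total = len(labels)
    chance = 100.0 / len(counts)
    majority = 100.0 * max(counts.values()) / total
    return chance, majority


# Hypothetical labels for a 4-class problem (not the real dataset).
example_labels = [0, 0, 0, 1, 2, 3, 3, 3, 3, 3]
chance, majority = baseline_accuracies(example_labels)
print('chance: %.1f %%, majority-class: %.1f %%' % (chance, majority))
```

With the script above, the labels could be taken from `testset.targets`, which `ImageFolder` exposes as a list of class indices. A test accuracy near either baseline would suggest the network is not learning anything useful from the images.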